This post was written with Dian Xu and Joel Hawkins of Rocket Companies. Rocket Companies is a Detroit-based FinTech company with a mission to “Help Everyone Home”. With the current housing shortage and affordability concerns, Rocket simplifies the homeownership process through an intuitive and AI-driven experience. This comprehensive framework streamlines every step of the homeownership journey, empowering consumers to search, purchase, and manage home financing effortlessly. Rocket integrates home

Providing effective multilingual customer support in global businesses presents significant operational challenges. Through collaboration between AWS and DXC Technology, we’ve developed a scalable voice-to-voice (V2V) translation prototype that transforms how contact centers handle multilingual customer interactions. In this post, we discuss how AWS and DXC used Amazon Connect and other AWS AI services to deliver near real-time V2V translation capabilities.

Challenge: Serving customers in multiple languages

In Q3 2024,

Foundation models (FMs) and generative AI are transforming how financial services institutions (FSIs) operate their core business functions. AWS FSI customers, including NASDAQ, State Bank of India, and Bridgewater, have used FMs to reimagine their business operations and deliver improved outcomes. FMs are probabilistic in nature and produce a range of outcomes. Though these models can produce sophisticated outputs through the interplay of pre-training, fine-tuning, and prompt engineering, their

Formula 1® (F1) races are high-stakes affairs where operational efficiency is paramount. During these live events, F1 IT engineers must triage critical issues across their services, such as network degradation to one of their APIs. This impacts downstream services that consume data from the API, including products such as F1 TV, which offer live and on-demand coverage of every race as well as real-time telemetry. Determining the root cause

Cycling is a fun way to stay fit, enjoy nature, and connect with friends and acquaintances. However, riding is becoming increasingly dangerous, especially in situations where cyclists and cars share the road. According to the NHTSA, in the United States an average of 883 people on bicycles are killed in traffic crashes each year, with an average of about 45,000 injury-only crashes reported annually. While total bicycle fatalities account for only

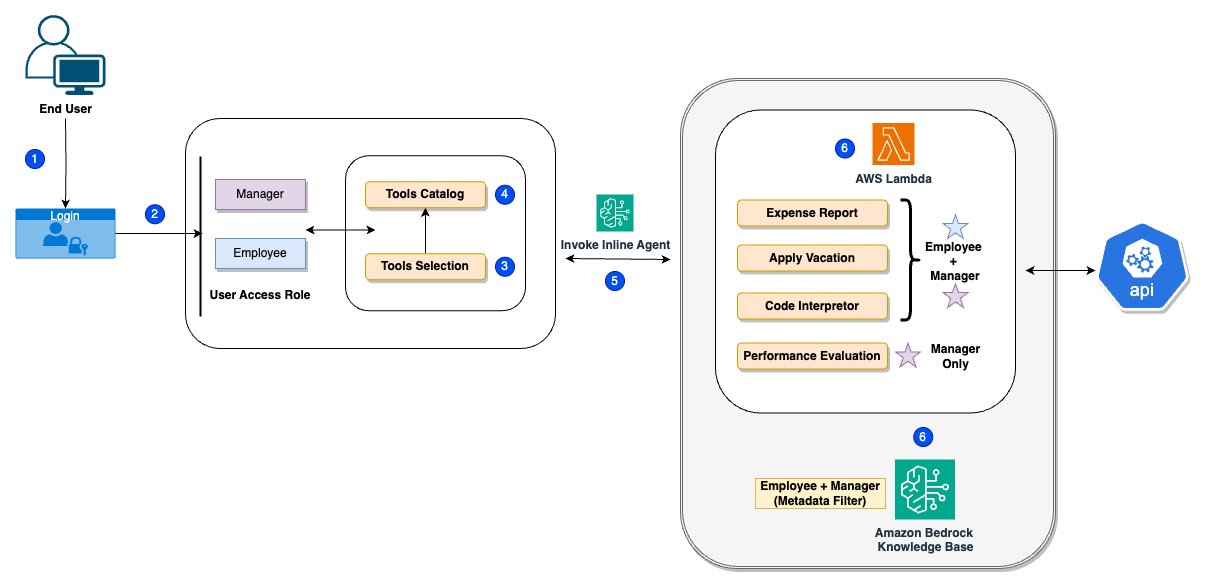

AI agents continue to gain momentum, as businesses use the power of generative AI to reinvent customer experiences and automate complex workflows. We are seeing Amazon Bedrock Agents applied in investment research, insurance claims processing, root cause analysis, advertising campaigns, and much more. Agents use the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. They use developer-provided instructions to create an orchestration plan

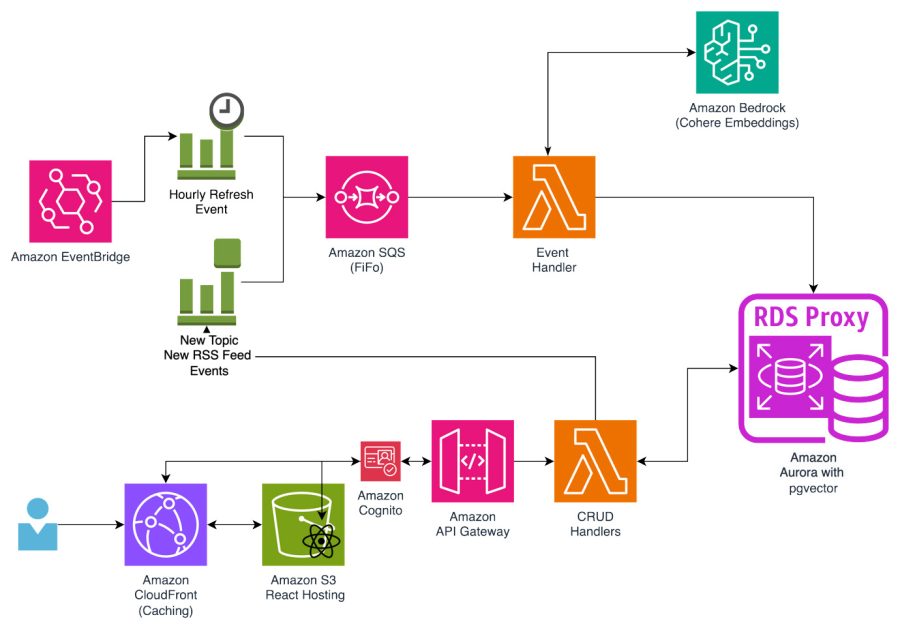

In this post, we discuss what embeddings are, show how to practically use language embeddings, and explore how to use them to add functionality such as zero-shot classification and semantic search. We then use Amazon Bedrock and language embeddings to add these features to a really simple syndication (RSS) aggregator application. Amazon Bedrock is a fully managed service that makes foundation models (FMs) from leading AI startups and Amazon

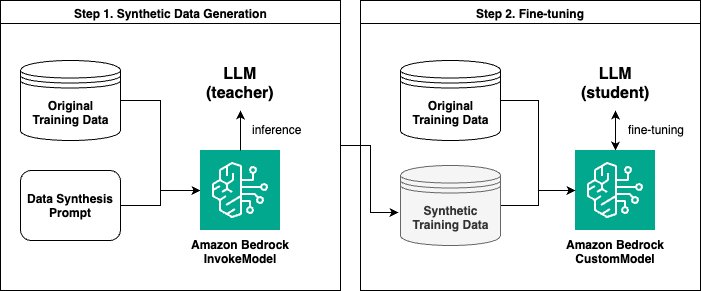

There’s a growing demand from customers to incorporate generative AI into their businesses. Many use cases involve using pre-trained large language models (LLMs) through approaches like Retrieval Augmented Generation (RAG). However, for advanced, domain-specific tasks or those requiring specific formats, model customization techniques such as fine-tuning are sometimes necessary. Amazon Bedrock provides you with the ability to customize leading foundation models (FMs) such as Anthropic’s Claude 3 Haiku and

This blog post is co-written with Moran Beladev, Manos Stergiadis, and Ilya Gusev from Booking.com. Large language models (LLMs) have revolutionized the field of natural language processing with their ability to understand and generate humanlike text. Trained on broad, generic datasets spanning a wide range of topics and domains, LLMs use their parametric knowledge to perform increasingly complex and versatile tasks across multiple business use cases. Furthermore, companies are

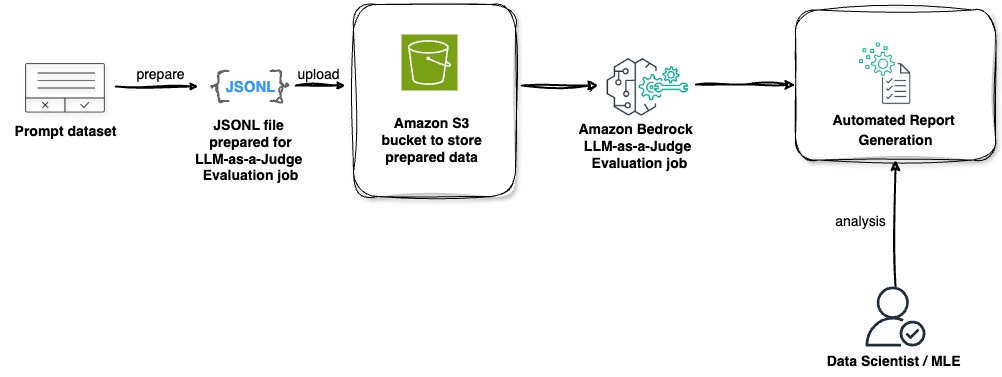

The evaluation of large language model (LLM) performance, particularly in response to a variety of prompts, is crucial for organizations aiming to harness the full potential of this rapidly evolving technology. The introduction of an LLM-as-a-judge framework represents a significant step forward in simplifying and streamlining the model evaluation process. This approach allows organizations to assess their AI models’ effectiveness using pre-defined metrics, making sure that the technology aligns